Benefits of IaC Testing

Testing IaC offers several benefits, and understanding the value of testing at different stages of development can help you maximize its impact.

Customer Satisfaction

Testing ensures that the infrastructure meets user requirements and works as intended. A well-tested IaC implementation leads to consistent, repeatable deployments, minimizing user frustrations caused by unexpected errors or downtime. For example, tests can validate that an infrastructure module deploys the correct resources with the expected configurations, fulfilling the customer's needs seamlessly.

Dependability and Repeatability

IaC testing ensures that the same code produces consistent infrastructure across different environments. Whether deploying in development, staging, or production, testing confirms that infrastructure behaves predictably, reducing the risk of configuration drift or unexpected inconsistencies.

Security and Compliance

Testing helps identify vulnerabilities, misconfigurations, and violations of security policies before infrastructure reaches production. Automated tools can scan for compliance with industry standards, such as SOC 2, HIPAA, or internal organizational policies. This proactive approach reduces the risk of security breaches and audit failures.

Quicker and Safer Changes

With a comprehensive suite of tests, teams can implement changes with confidence. Regression tests ensure that new updates don’t inadvertently break existing infrastructure. By automating these tests, teams can deploy infrastructure updates faster while maintaining reliability.

Audit Capability

Testing adds traceability to your IaC workflow, providing a clear record of what was tested, what passed, and what failed. This audit trail is invaluable for compliance reviews and debugging. It also demonstrates that your team follows robust processes for verifying infrastructure before deployment.

Production Failure Prevention

Without testing, issues often surface in production, where their impact can be significant and costly. Testing helps catch bugs at earlier stages, saving time and resources by preventing expensive downtime or service disruptions.

Testing at Different Stages of Development

To ensure the reliability, security, and functionality of Infrastructure as Code, testing must be integrated throughout its lifecycle. Each stage of testing focuses on specific aspects of the code, allowing teams to identify and address issues early before they escalate into larger problems. By applying a layered testing approach, organizations can create a robust and dependable infrastructure pipeline while maintaining agility in development and deployment.

Testing IaC involves multiple stages, each with distinct goals and tools:

- Static Analysis: The first step, performed before any code is executed, involves validating the syntax, structure, and compliance of the IaC code. This ensures foundational correctness and adherence to best practices.

- Unit Testing: At this stage, individual components or modules are tested in isolation to confirm that they behave as expected under specific conditions, such as verifying logic and input validations.

- Integration Testing: Moving beyond isolated modules, integration testing ensures that all components work together correctly after the infrastructure is applied, identifying issues like permissions errors or resource dependencies.

- End-to-End Testing: In production-like environments, end-to-end testing validates the entire infrastructure stack by simulating real-world use cases to confirm that it performs as intended under expected conditions.

- Continuous Monitoring and Audit Testing: After deployment, continuous checks are essential to ensure infrastructure remains compliant, secure, and aligned with organizational standards over time.

This multi-stage approach provides a comprehensive safety net, catching issues at the earliest opportunity and ensuring that each layer of testing builds on the confidence established by the previous one. In the following sections, we’ll explore these stages in detail and highlight the tools and strategies that make testing IaC efficient, effective, and scalable.

Types of IaC Testing

Static Analysis

Static analysis is a foundational step in ensuring the quality and correctness of your infrastructure as code (IaC). This process involves checking your code for syntactic and structural issues before it is executed. Tools like terraform validate, TFLint, tfsec, and Checkov perform these checks efficiently, each at a different level of scrutiny.

At the most basic level, the terraform validate command verifies whether the code is syntactically correct and properly structured for Terraform. It ensures that variables are defined, syntax is valid, and no critical elements like curly braces are missing. While this is a minimal level of testing, it serves as a good starting point.

To go beyond basic syntax validation, tools such as TFLint, tfsec, and Checkov offer more advanced static analysis. They read your code, detect common configuration mistakes and security vulnerabilities, and provide actionable feedback. Their lightweight nature makes them an excellent fit for CI/CD pipelines, where they can run in seconds and catch errors early in the development cycle.
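As a minimal sketch of what linter configuration can look like, the following .tflint.hcl enables TFLint's bundled Terraform ruleset with its recommended preset and explicitly turns on one naming rule. The choice of rule is an illustrative assumption, not a recommendation from this article.

```hcl
# .tflint.hcl (illustrative; the rule selection is an assumption)

# Enable the Terraform language ruleset that ships with TFLint.
plugin "terraform" {
  enabled = true
  preset  = "recommended"
}

# Explicitly enable a naming-convention rule on top of the preset.
rule "terraform_naming_convention" {
  enabled = true
}
```

With a file like this at the root of the repository, running tflint in a CI job applies the same rules to every change.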

A more advanced static analysis approach involves evaluating your code's planned output. Tools like HashiCorp Sentinel and terraform-compliance operate at this level. These tools analyze the results of the terraform plan command, offering an additional layer of validation by examining the planned resource changes. While they are fast and easy to use, their ability to detect issues is inherently limited to what can be inferred from the planned output.

The key takeaway is that static analysis provides a quick, effective way to identify errors and enforce best practices in your infrastructure code. If you're not performing any testing at all, incorporating static analysis is an essential first step toward improving the reliability and security of your IaC workflows.

Unit Testing

Unit testing is an essential practice in IaC that focuses on validating aspects of your configuration that are knowable at plan time. This includes modeling different combinations of input values, verifying the behavior of conditionals and logic, and ensuring that expected outcomes are achieved. By running these tests at the planning stage, you can validate elements such as string interpolations and detect unintended consequences of refactoring before applying the configuration.

Another key aspect of unit testing is testing for scenarios where failures are expected. For instance, you can use the expect_failures attribute to validate that input variable checks, preconditions, and other safeguards properly reject invalid inputs. This type of testing flips the typical logic of success and failure: if an invalid input doesn't trigger a failure, the test itself fails. This approach ensures that your code is robust against misconfigurations and improper usage. The examples below show common uses of unit tests.

Validating Module Logic

Unit tests are particularly useful for testing Terraform modules by simulating various input scenarios and verifying expected outputs. This ensures that conditionals, variable constraints, and interpolations work correctly.

Example: Testing Conditional Logic in Terraform

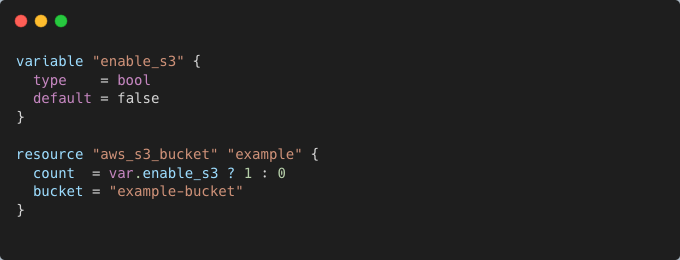

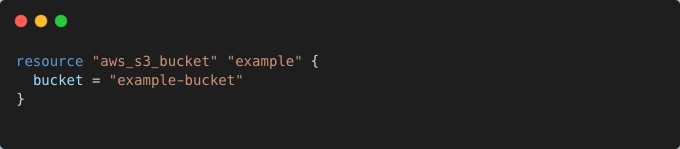

A Terraform module might conditionally create an S3 bucket based on a variable:

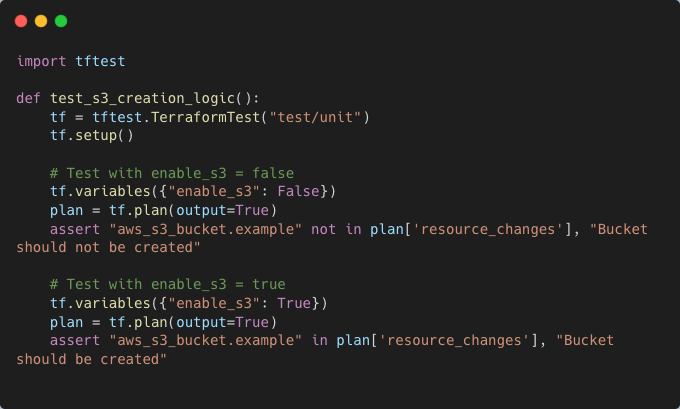

A unit test can validate whether the module correctly handles different values for enable_s3:
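The following is a minimal sketch of such a test, assuming a module shaped like the one described above. The resource name aws_s3_bucket.this, the bucket name, and the file paths are hypothetical. The mock_provider block (available from Terraform v1.7) is an assumption added so the plan can run without AWS credentials.

```hcl
# main.tf (hypothetical module under test)
variable "enable_s3" {
  type        = bool
  description = "Whether to create the S3 bucket."
  default     = false
}

resource "aws_s3_bucket" "this" {
  # count evaluates to 1 or 0, so the bucket exists only when enabled.
  count  = var.enable_s3 ? 1 : 0
  bucket = "example-iac-testing-bucket" # placeholder name
}

# tests/s3_toggle.tftest.hcl (separate file)

# Mocked provider: no credentials needed and no real resources are touched.
mock_provider "aws" {}

run "bucket_planned_when_enabled" {
  command = plan

  variables {
    enable_s3 = true
  }

  assert {
    condition     = length(aws_s3_bucket.this) == 1
    error_message = "Expected exactly one bucket when enable_s3 is true."
  }
}

run "bucket_skipped_when_disabled" {
  command = plan

  variables {
    enable_s3 = false
  }

  assert {
    condition     = length(aws_s3_bucket.this) == 0
    error_message = "Expected no bucket when enable_s3 is false."
  }
}
```

Because both runs use command = plan, the conditional is exercised for each input value without creating anything.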

Ensuring Input Validation

Unit testing also verifies that input variables meet predefined constraints, ensuring that Terraform configurations reject invalid values.

Example: Enforcing Input Constraints

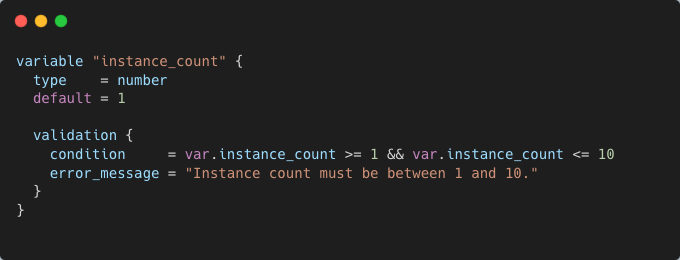

Terraform allows defining constraints on input variables, such as enforcing minimum/maximum values:

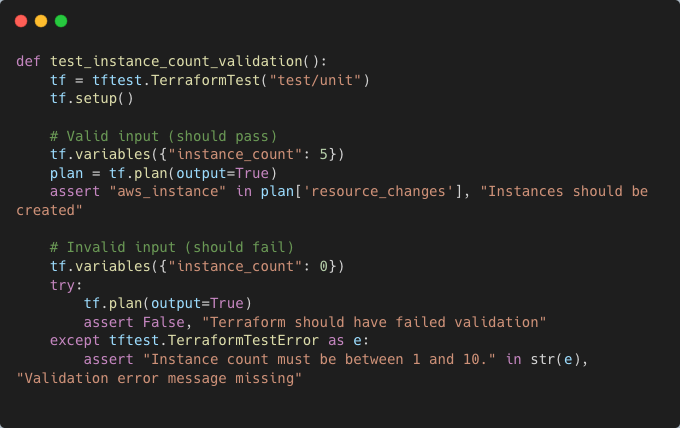

A unit test can confirm that Terraform enforces this validation:
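A sketch of this pattern, under the assumption of a hypothetical instance_count variable limited to the range 1 to 10, could look like the following. The first run checks that a valid value is accepted; the second uses expect_failures so that the run passes only if the validation rejects the value.

```hcl
# variables.tf (hypothetical)
variable "instance_count" {
  type        = number
  description = "Number of instances to create."

  validation {
    condition     = var.instance_count >= 1 && var.instance_count <= 10
    error_message = "instance_count must be between 1 and 10."
  }
}

# tests/instance_count.tftest.hcl (separate file)
run "accepts_value_in_range" {
  command = plan

  variables {
    instance_count = 3
  }
}

run "rejects_value_out_of_range" {
  command = plan

  variables {
    instance_count = 50
  }

  # The run passes only if the validation on this variable fails.
  expect_failures = [
    var.instance_count,
  ]
}
```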

Testing Failures with expect_failures

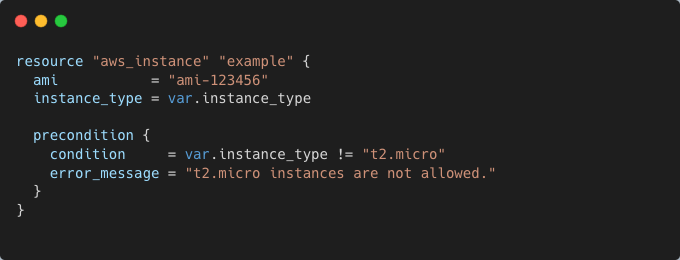

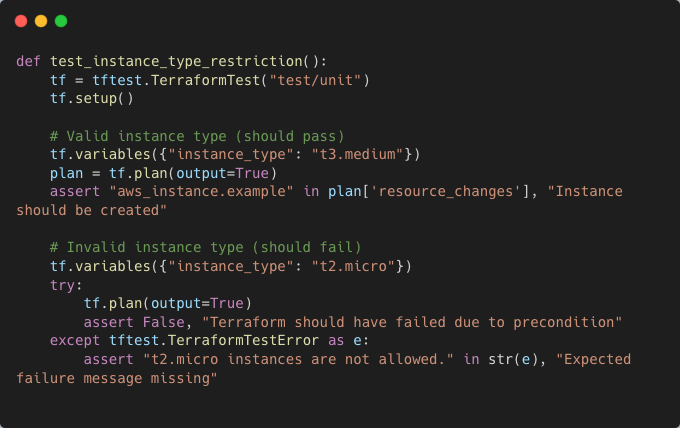

Unit tests can also validate expected failures, ensuring that Terraform configurations correctly reject misconfigurations. Terraform provides built-in mechanisms for defining preconditions and postconditions to enforce business logic.

Example: Testing Failure Cases

A unit test can check that applying this configuration with a restricted instance type fails as expected:
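One way to sketch this, assuming a hypothetical aws_instance.app resource whose precondition forbids a short list of instance types, is shown below. The restricted types, the AMI ID, and the mock_provider block (Terraform v1.7+) are illustrative assumptions.

```hcl
# main.tf (hypothetical)
variable "ami_id" {
  type = string
}

variable "instance_type" {
  type = string
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = var.instance_type

  lifecycle {
    # Business rule (assumed for illustration): very small types are not allowed.
    precondition {
      condition     = !contains(["t2.nano", "t2.micro"], var.instance_type)
      error_message = "The selected instance type is restricted for this workload."
    }
  }
}

# tests/restricted_type.tftest.hcl (separate file)
mock_provider "aws" {}

run "restricted_instance_type_is_rejected" {
  command = plan

  variables {
    ami_id        = "ami-00000000000000000" # placeholder
    instance_type = "t2.micro"
  }

  # The run passes only if the precondition on this resource fails.
  expect_failures = [
    aws_instance.app,
  ]
}
```

The precondition depends only on an input variable, so it is evaluated at plan time and the test never needs to create an instance.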

By incorporating unit tests at this level, teams can ensure that Terraform modules function correctly before deployment. Unit testing provides early validation of logic, input constraints, and expected failures, making infrastructure code more reliable, maintainable, and resilient to future changes.

Integration Testing

Integration testing addresses use cases that cannot be validated until after the terraform apply step. Unlike terraform plan, which provides a static preview of changes, integration tests verify runtime behaviors and outcomes that are determined only when the infrastructure is actually applied. These include verifying the values of calculated attributes and outputs, which are resolved only at apply time, and detecting apply-time errors such as missing permissions, unavailable APIs, or features that are not enabled in your cloud account. Additionally, issues like invalid YAML or JSON in policies or job specifications, which may look correct in a plan, can be caught during integration testing when they are applied to actual resources.

Another critical use case for integration testing is interoperability testing, which ensures that your infrastructure behaves consistently across different cloud regions or partitions, such as commercial regions versus GovCloud. Integration tests can also validate the impact of changes to external dependencies, such as new versions of Terraform, updated providers, or changes to child modules. By running tests across regions, partitions, and various dependency versions, integration testing provides confidence that your infrastructure operates reliably under diverse conditions and configurations. Here, we’ll dive deeper into technical specifics and illustrate integration testing with examples.

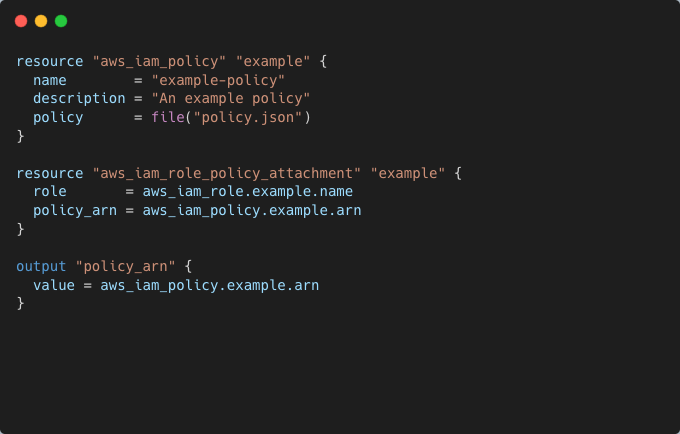

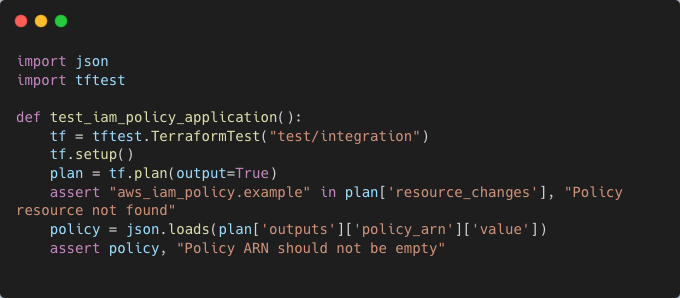

Runtime Validation

One of the primary goals of integration testing is to validate runtime attributes, such as calculated outputs, resource dependencies, and final configurations. For example, you might have an IAM policy defined in JSON that appears valid during a terraform plan but fails to attach due to structural issues in the actual JSON data. Testing this scenario ensures such failures are caught during the testing phase.

Example: Verifying IAM Policy Application

During integration testing, a test script can confirm that the policy.json file is correctly structured and can attach without errors:

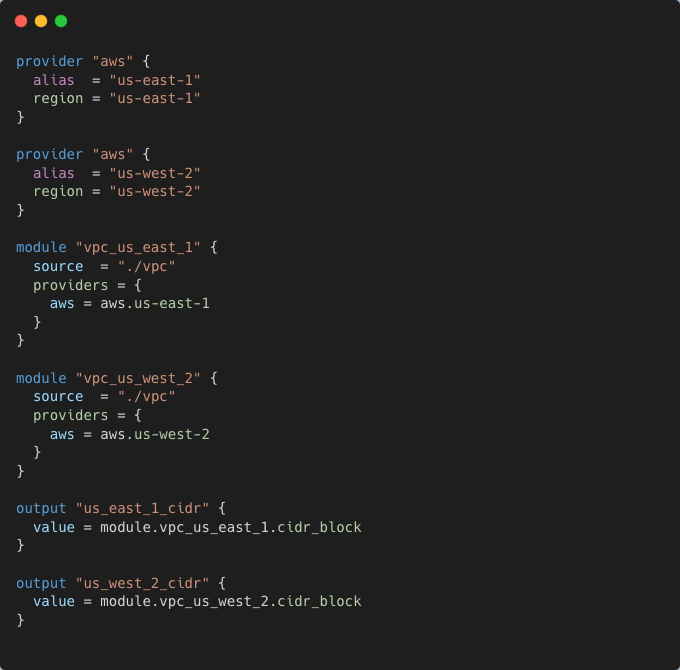
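As an additional sketch, the same idea can be written with the native test framework. It assumes a hypothetical configuration that loads policy.json from the module directory and attaches it to a role; all resource names are illustrative. If the cloud API rejects the policy document, the apply step errors and the run fails before any assertion is evaluated.

```hcl
# main.tf (hypothetical)
resource "aws_iam_role" "app" {
  name = "example-app-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

resource "aws_iam_policy" "app" {
  name   = "example-app-policy"
  policy = file("${path.module}/policy.json")
}

resource "aws_iam_role_policy_attachment" "app" {
  role       = aws_iam_role.app.name
  policy_arn = aws_iam_policy.app.arn
}

# tests/iam_policy.tftest.hcl (separate file)
run "policy_is_created_and_attached" {
  # apply creates real resources; the framework destroys them after the test.
  command = apply

  assert {
    condition     = can(jsondecode(aws_iam_policy.app.policy))
    error_message = "policy.json did not produce a decodable policy document."
  }

  assert {
    condition     = aws_iam_role_policy_attachment.app.policy_arn == aws_iam_policy.app.arn
    error_message = "The policy was not attached to the role."
  }
}
```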

Interoperability Testing

Integration tests can identify inconsistencies across regions or partitions, such as differences between commercial cloud environments and GovCloud. For instance, certain AWS services may not be available in all regions. Running tests in multiple environments ensures compatibility and highlights gaps in resource availability.

Example: Testing Across Regions

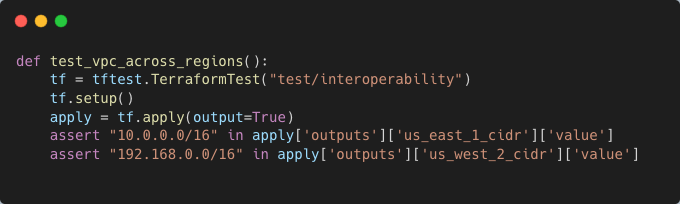

A test might deploy a VPC in two regions and validate network settings:

The integration test can assert that both VPCs are created successfully and have the expected CIDR ranges:
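A minimal sketch of such a test follows. It assumes a hypothetical configuration that creates one VPC per provider configuration, with the second region supplied through a provider alias named aws.secondary. The regions, CIDR ranges, and resource names are illustrative.

```hcl
# main.tf (hypothetical)
terraform {
  required_providers {
    aws = {
      source                = "hashicorp/aws"
      configuration_aliases = [aws.secondary]
    }
  }
}

variable "primary_cidr" {
  type = string
}

variable "secondary_cidr" {
  type = string
}

resource "aws_vpc" "primary" {
  cidr_block = var.primary_cidr
}

resource "aws_vpc" "secondary" {
  provider   = aws.secondary
  cidr_block = var.secondary_cidr
}

# tests/multi_region.tftest.hcl (separate file)
provider "aws" {
  region = "us-east-1"
}

provider "aws" {
  alias  = "secondary"
  region = "us-west-2"
}

variables {
  primary_cidr   = "10.0.0.0/16"
  secondary_cidr = "10.1.0.0/16"
}

run "vpcs_created_in_both_regions" {
  command = apply

  # Map the test file's provider configurations onto the module's.
  providers = {
    aws           = aws
    aws.secondary = aws.secondary
  }

  assert {
    condition     = aws_vpc.primary.cidr_block == "10.0.0.0/16"
    error_message = "Primary VPC does not have the expected CIDR range."
  }

  assert {
    condition     = aws_vpc.secondary.cidr_block == "10.1.0.0/16"
    error_message = "Secondary VPC does not have the expected CIDR range."
  }
}
```

Swapping the region values in the provider blocks is enough to repeat the same assertions against another region or partition.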

Dependency Validation

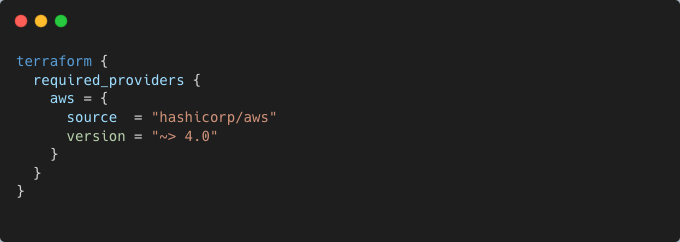

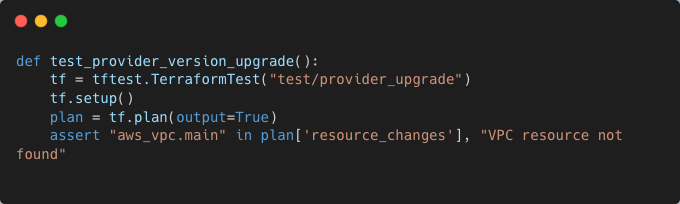

Integration tests also validate that updates to dependencies, such as new Terraform versions, provider updates, or child module changes, do not break the infrastructure. For example, when upgrading a Terraform provider, tests can verify that all resources remain functional and no deprecations disrupt the workflow.

Example: Testing with Updated Provider Versions

Test the new provider version to ensure compatibility:
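One possible shape for this check, sketched under assumptions: the version constraint is bumped on a branch, and an apply-mode test confirms that a representative resource still deploys. The version numbers, bucket name, and file names are illustrative placeholders.

```hcl
# versions.tf (hypothetical): candidate provider version on an upgrade branch
terraform {
  required_version = ">= 1.6.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # illustrative candidate constraint
    }
  }
}

# main.tf (hypothetical)
resource "aws_s3_bucket" "logs" {
  bucket = "example-provider-upgrade-logs" # placeholder name
}

# tests/provider_upgrade.tftest.hcl (separate file)
run "resources_still_apply_after_upgrade" {
  command = apply

  assert {
    condition     = aws_s3_bucket.logs.bucket == "example-provider-upgrade-logs"
    error_message = "Bucket was not created as expected with the candidate provider version."
  }
}
```

Running terraform init -upgrade followed by terraform test on the branch exercises the configuration against the newly selected provider release before the change is merged.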

Error Scenarios

Integration tests are particularly effective in catching apply-time errors such as missing permissions, disabled APIs, or quota limits. For example, testing might reveal that an S3 bucket creation fails because the s3:CreateBucket permission is missing from the attached IAM role.

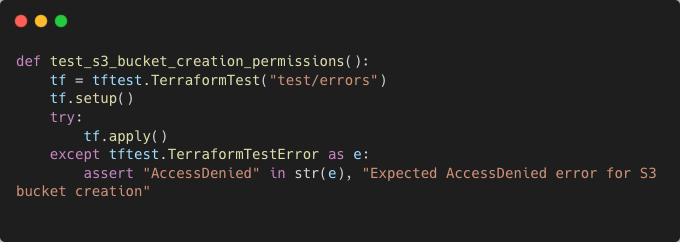

Example: Verifying Apply-Time Permissions

Integration testing can simulate this scenario and detect missing permissions:
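As a hedged sketch, the test below runs the apply under the same role the deployment pipeline would assume. The role ARN, region, and resource names are placeholders. If that role lacks s3:CreateBucket, the apply step returns an access error and the run is reported as failed.

```hcl
# main.tf (hypothetical)
resource "aws_s3_bucket" "artifacts" {
  bucket = "example-pipeline-artifacts" # placeholder name
}

# tests/permissions.tftest.hcl (separate file)

# Run the test with the deployment role so permission gaps surface here
# rather than in production. The ARN below is a placeholder.
provider "aws" {
  region = "us-east-1"

  assume_role {
    role_arn = "arn:aws:iam::123456789012:role/example-deploy-role"
  }
}

run "deploy_role_can_create_bucket" {
  command = apply

  assert {
    condition     = aws_s3_bucket.artifacts.id != ""
    error_message = "Bucket was not created by the deployment role."
  }
}
```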

By leveraging these techniques and tools, integration testing provides a deep level of validation, ensuring that infrastructure behaves as expected under real-world conditions. It not only catches runtime issues but also builds confidence in your infrastructure's interoperability and adaptability across different environments and dependency changes.

Tools

Validation in Terraform

Terraform has steadily introduced features to enhance validation capabilities, enabling more rigorous checks at various stages of the IaC lifecycle:

- v0.13 – Input Variable Validation: This feature enforces specific requirements on input variables, such as ensuring that a string meets a length requirement or that a numeric value is above a certain threshold.

- v1.2 – Pre- and Post-Conditions: These allow for custom rules on resources, data sources, and outputs. For example, you can validate that a user-provided AMI has the correct architecture or type before applying the configuration.

- v1.5 – Check Blocks: Introduced as a top-level block, check blocks enable you to validate your infrastructure as a whole. For example, after deploying your computing, storage, and networking resources, you can write a check block to ensure that your application is up and responding as expected.

These validation features provide a comprehensive toolkit for enforcing correctness and preventing errors throughout the infrastructure deployment process.
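To illustrate the newest of these features, here is a minimal sketch of a check block that probes an application endpoint. The health_url variable is a hypothetical input, and the example assumes the hashicorp/http provider is available for the scoped data source.

```hcl
variable "health_url" {
  type        = string
  description = "URL of the application's health endpoint (hypothetical input)."
}

check "application_health" {
  # Scoped data source: queried only as part of this check.
  data "http" "health" {
    url = var.health_url
  }

  assert {
    condition     = data.http.health.status_code == 200
    error_message = "${var.health_url} returned an unhealthy status code."
  }
}
```

Unlike a failed precondition or variable validation, a failed check block is reported as a warning and does not block the plan or apply.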

Terraform Test Framework

The Terraform test framework, introduced in v1.6, addresses two fundamental questions: Does the code render properly? Do the created resources function as expected? While tools like terraform validate handle syntax and logic validation, the test framework extends this functionality by providing native support for unit and integration testing without requiring third-party tools like Terratest or Kitchen-Terraform.

The test framework allows you to define tests declaratively using HCL, avoiding the need to learn additional programming languages like Go or Ruby. Tests are structured around run blocks, each of which executes either terraform plan or terraform apply (apply is the default). Each run block contains assertions that check conditions and report an error message on failure. A test passes if all assertions succeed; otherwise, it fails.

In addition to basic validation, the test framework supports advanced functionality, such as specifying input variable values for test runs, defining provider configurations for individual tests or entire suites, and supplying mock data for resources and data sources (introduced in v1.7). Tests can be executed for the entire configuration or targeted subsets based on directory or file names, providing flexibility in how you validate your IaC.
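The sketch below combines several of these features in one test file: file-level variables, a per-run override, and a mocked provider with default values for computed attributes. The module it targets (an aws_s3_bucket.this resource controlled by an enable_s3 variable) and the mocked ARN are hypothetical.

```hcl
# tests/mocked.tftest.hcl (hypothetical)

# Variable values shared by every run block in this file.
variables {
  enable_s3 = true
}

# Mocked provider (v1.7+): no credentials needed, no real resources created.
mock_provider "aws" {
  mock_resource "aws_s3_bucket" {
    defaults = {
      arn = "arn:aws:s3:::mocked-bucket"
    }
  }
}

run "bucket_uses_mocked_arn" {
  # With a mocked provider, apply populates computed attributes with mock data.
  command = apply

  assert {
    condition     = aws_s3_bucket.this[0].arn == "arn:aws:s3:::mocked-bucket"
    error_message = "Expected the mocked ARN on the bucket."
  }
}

run "bucket_absent_when_disabled" {
  command = plan

  # Per-run override of the file-level value.
  variables {
    enable_s3 = false
  }

  assert {
    condition     = length(aws_s3_bucket.this) == 0
    error_message = "Expected no bucket when enable_s3 is false."
  }
}
```

A single file can be targeted with terraform test -filter=tests/mocked.tftest.hcl, and a non-default test location can be selected with the -test-directory option.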

By incorporating these testing and validation practices, you can significantly enhance the reliability, security, and maintainability of your infrastructure code.